Newsletter #3 - When a Language Model Sees

Trying to eliminate bias in LMs, Google's plans, using LMs in robotics, and more

Hello everyone,

I just wanted to say a quick thank you for supporting my newsletter. We hit 40 followers in just 2 weeks - how awesome is that?! I couldn't have done it without all of you amazing folks. Now, let’s get to it.

What is BARD?

Google's BARD has had a bumpy ride since its inception. Initially, the tech giant criticized OpenAI's ChatGPT, claiming it was not ready for production. However, Google's own initial release of BARD was a disaster and failed to deliver on its promises. Now the company has clarified that BARD is not designed for search, which raises the question: what exactly is BARD meant to do? With these twists and turns, it remains to be seen where BARD will go from here.

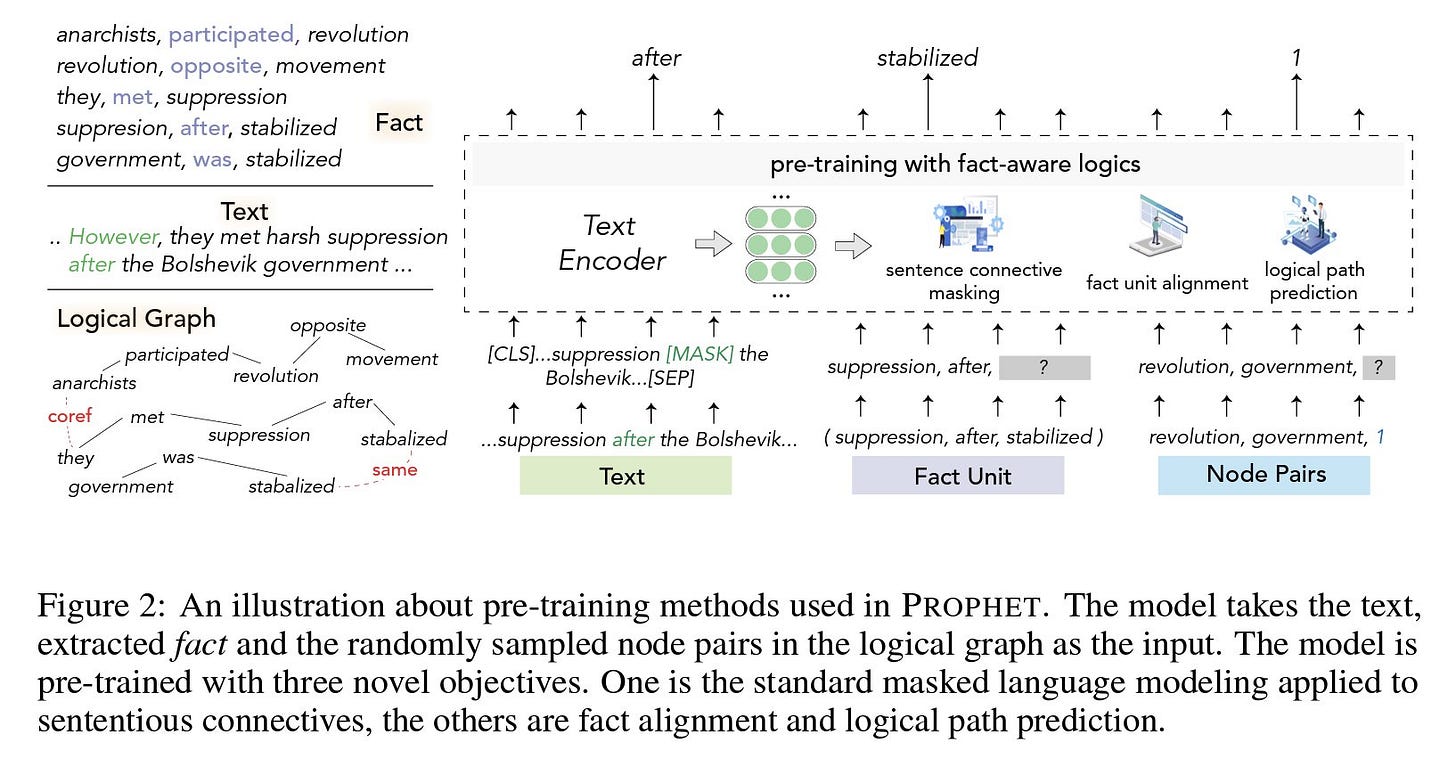

Language Models and Bias

Name a more iconic duo, right? Researchers at MIT trained a language model to learn the relationship between two sentences: whether the second one entails, contradicts, or is neutral to the first. Their models were smaller and showed significantly less biased output. For example, take the premise "The person is a doctor" followed by the hypothesis "The person is masculine". The model will predict "Neutral" because nothing in the premise reveals the person's gender. (read more)
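To make the three-way labelling concrete, here is a minimal sketch of how a model's raw scores for a premise/hypothesis pair could be turned into one of the three labels, and how often a model answers "neutral" on a set of pairs. The scores, the label order, and the helper names are all assumptions for illustration; none of this comes from the MIT work itself.

```python
import math

# Assumed label order for the three entailment classes.
LABELS = ("entailment", "neutral", "contradiction")


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(scores):
    """Return the most probable of the three entailment labels."""
    probs = softmax(scores)
    best = max(range(len(LABELS)), key=lambda i: probs[i])
    return LABELS[best]


def neutral_rate(score_rows):
    """Share of premise/hypothesis pairs that get the 'neutral' label."""
    labels = [predict_label(row) for row in score_rows]
    return labels.count("neutral") / len(labels)


# Hypothetical scores for the pair:
# premise "The person is a doctor" / hypothesis "The person is masculine".
example_scores = [0.2, 2.1, -0.5]
print(predict_label(example_scores))
```

On a set of pairs where the premise says nothing about gender, a higher `neutral_rate` would be one rough way to read "less biased" in this setup.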

PaLM + ViT

Google introduces PaLM-E, a combination of their PaLM language model and ViT vision model with 562B parameters. It can generate a plan of action for a given task to guide a robot without step-by-step human instructions! They published several demos asking a robot to fetch a bag of chips or sort objects by colour.

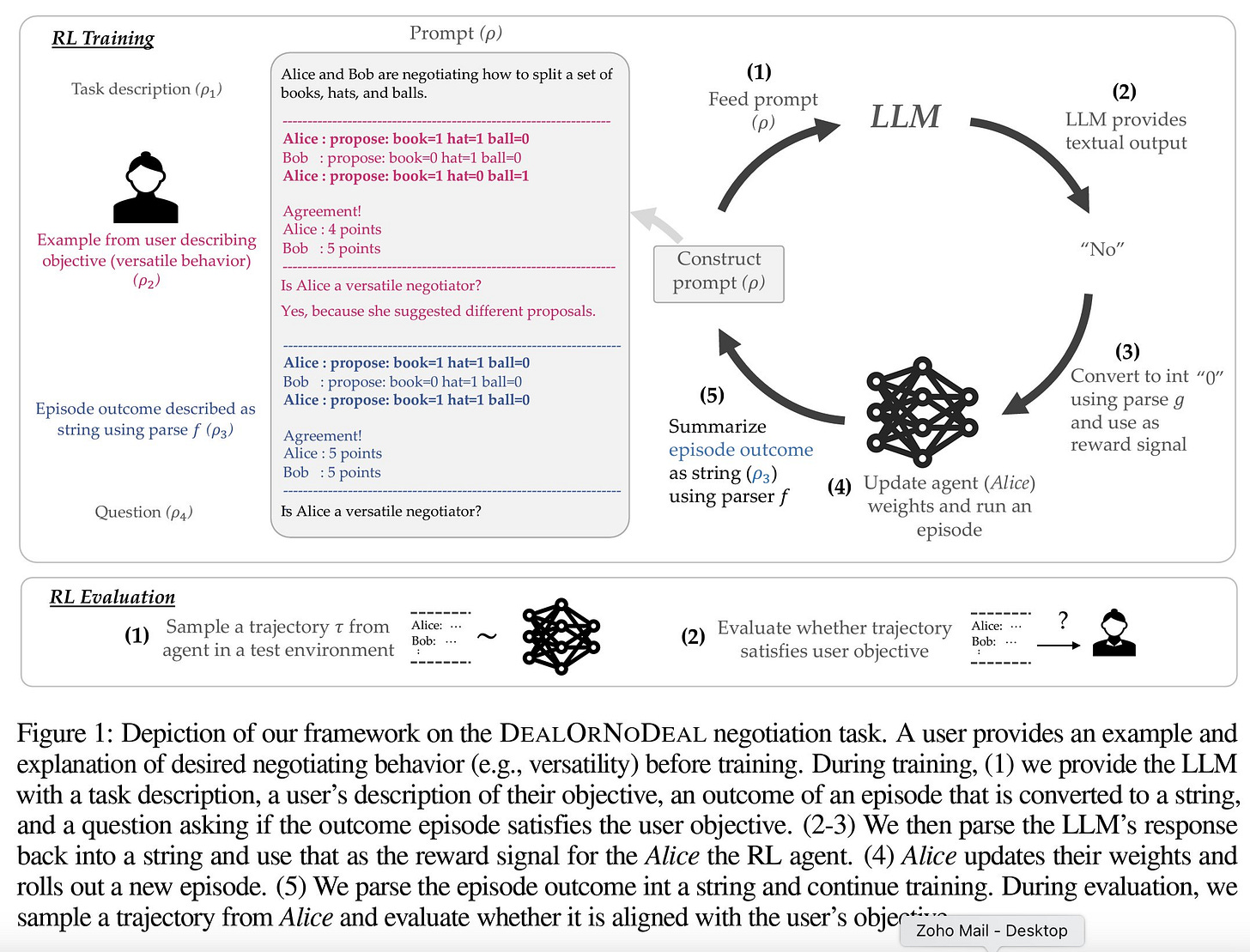

Reward with LM

Researchers at Stanford and DeepMind experimented with using GPT-3 as a proxy reward function. (paper, code) The user describes their preference in a prompt, and the LLM's judgment then guides the reward signal during the training process. This approach could perform better because a hand-written reward function typically checks for a single true outcome, whereas an LLM can draw on context and send a better signal even if the prediction is only partially correct.

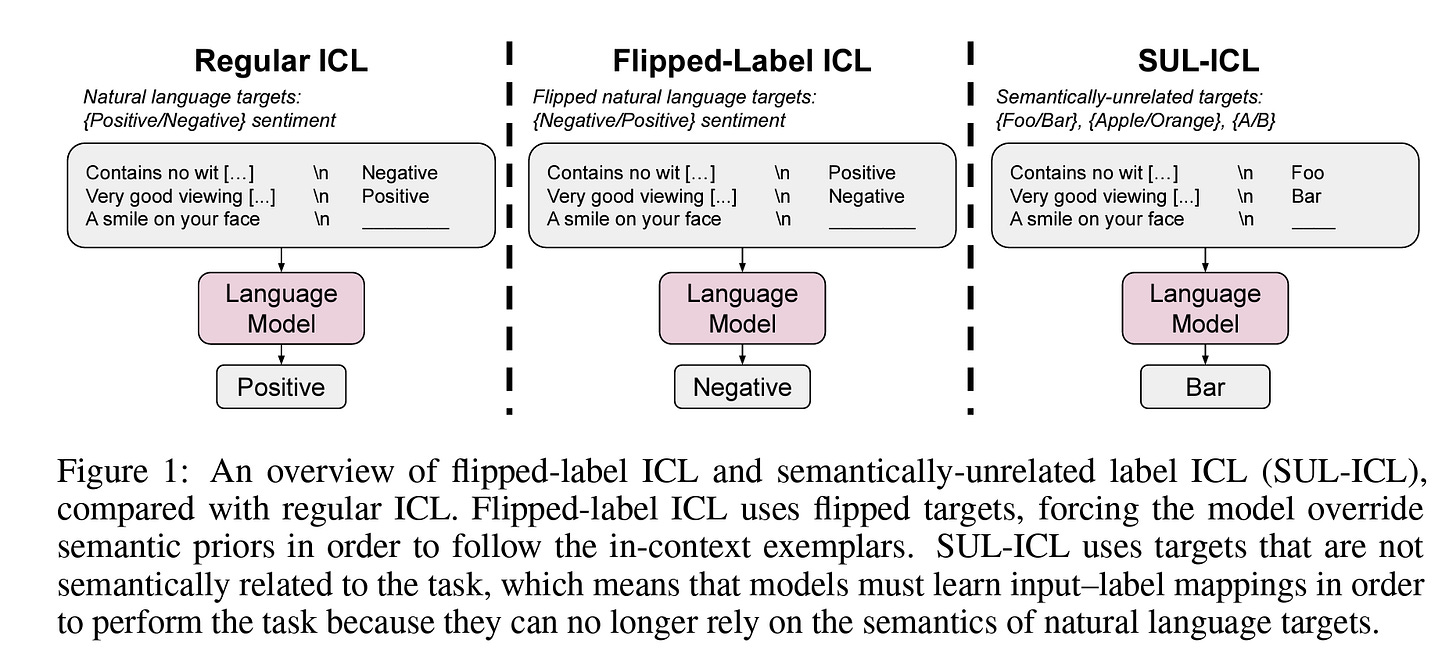
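The idea of an LLM standing in for a reward function can be sketched roughly as follows. This is only an illustration under assumptions: the prompt wording, the Yes/No parsing into a 0/1 reward, and the `fake_llm` stand-in are all hypothetical, and a real setup would call an actual model instead.

```python
from typing import Callable


def build_prompt(preference: str, episode_summary: str) -> str:
    """Combine the user's stated preference with what the agent did."""
    return (
        f"User preference: {preference}\n"
        f"Episode outcome: {episode_summary}\n"
        "Does the outcome satisfy the preference? Answer Yes or No.\n"
        "Answer:"
    )


def proxy_reward(llm: Callable[[str], str], preference: str,
                 episode_summary: str) -> float:
    """Ask the language model for a judgment and map it to a reward."""
    answer = llm(build_prompt(preference, episode_summary)).strip().lower()
    return 1.0 if answer.startswith("yes") else 0.0


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call, so the sketch runs offline."""
    outcome_line = prompt.splitlines()[1].lower()
    return "Yes" if "evenly" in outcome_line else "No"


preference = "The agent should share fairly."
print(proxy_reward(fake_llm, preference, "The agent split the items evenly."))
print(proxy_reward(fake_llm, preference, "The agent kept everything."))
```

The training loop would then use `proxy_reward` wherever it would normally call a hand-written reward function.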

In-Context Learning

A study by Google, Stanford, and Brown University reveals that large language models are better at learning the mapping between inputs and labels from in-context examples. They perform better than small models even when the labels are unrelated to the context or flipped.
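For readers who want to see what "flipped labels" looks like in practice, here is a small sketch that builds a few-shot prompt where every label has been swapped. The example sentences, the prompt layout, and the function names are assumptions for illustration, not the setup used in the study.

```python
def flip_labels(examples, mapping):
    """Replace each label with its counterpart from the mapping."""
    return [(text, mapping[label]) for text, label in examples]


def build_few_shot_prompt(examples, query):
    """Lay out labelled examples, then leave the query's label blank."""
    blocks = [f"Input: {text}\nLabel: {label}" for text, label in examples]
    blocks.append(f"Input: {query}\nLabel:")
    return "\n\n".join(blocks)


# Hypothetical sentiment examples with their normal labels.
examples = [
    ("I loved this film.", "positive"),
    ("The plot was a mess.", "negative"),
]
flip = {"positive": "negative", "negative": "positive"}

flipped_examples = flip_labels(examples, flip)
print(build_few_shot_prompt(flipped_examples, "What a wonderful soundtrack."))
```

A model that truly learns the mapping from the prompt should follow the flipped labels and answer "negative" for the last input, while a model leaning on what it already knows would answer "positive".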

Microsoft + ChatGPT News

According to Satya Nadella, the Bing chatbot will not be the end of the Microsoft and OpenAI partnership. We will see new AI features across all their services. A report from Forbes reveals that services like MS Teams, Power BI, Power Automate, and Viva Sales have already received or will soon receive new functionality.

I am more excited about the Office 365 suite! However, the company hasn't said anything about it yet. Even a simple writing assistant for Word would change how millions of people work every day!

The END,

What do you think of this newsletter? I would like to hear your feedback.

What parts were interesting? What sections did you not like? What was missing, and what do you want to see more of in the future?

Please reach out to me at: nlpiation@gmail.com.